Respondus Monitor: Understanding Proctoring Results

About Respondus Monitor

Respondus Monitor is an automated proctoring system for LockDown Browser.

Respondus Monitor uses a webcam to record students during exams, providing a powerful deterrent to cheating.

It uses computer vision technology to flag events that might require closer review by the instructor.

Class Results

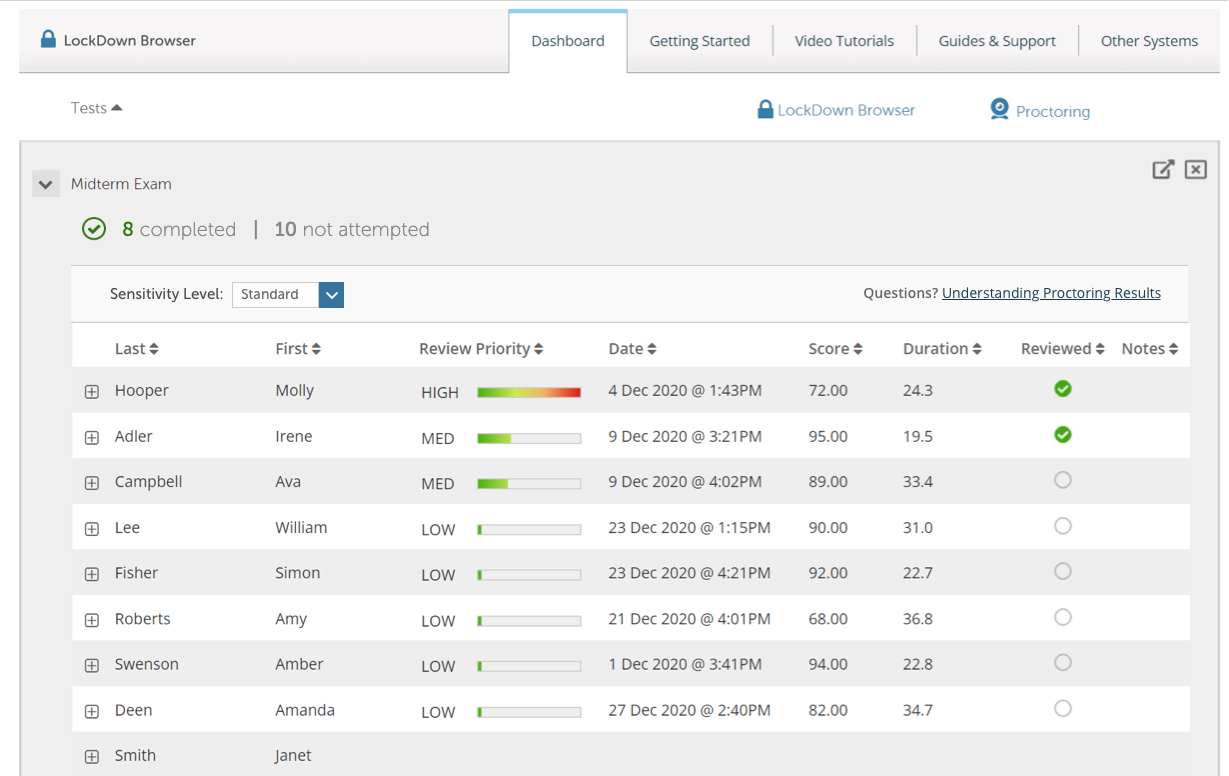

Once students have completed the exam, select the "Class Results" option from the LockDown Browser Dashboard. The class roster with summary data is shown here:

Review Priority is a composite measure that helps an instructor determine which exam sessions warrant a closer look. Results appear as Low, Medium or High, with a green-to-red bar that conveys the quantity and severity of flagged events.

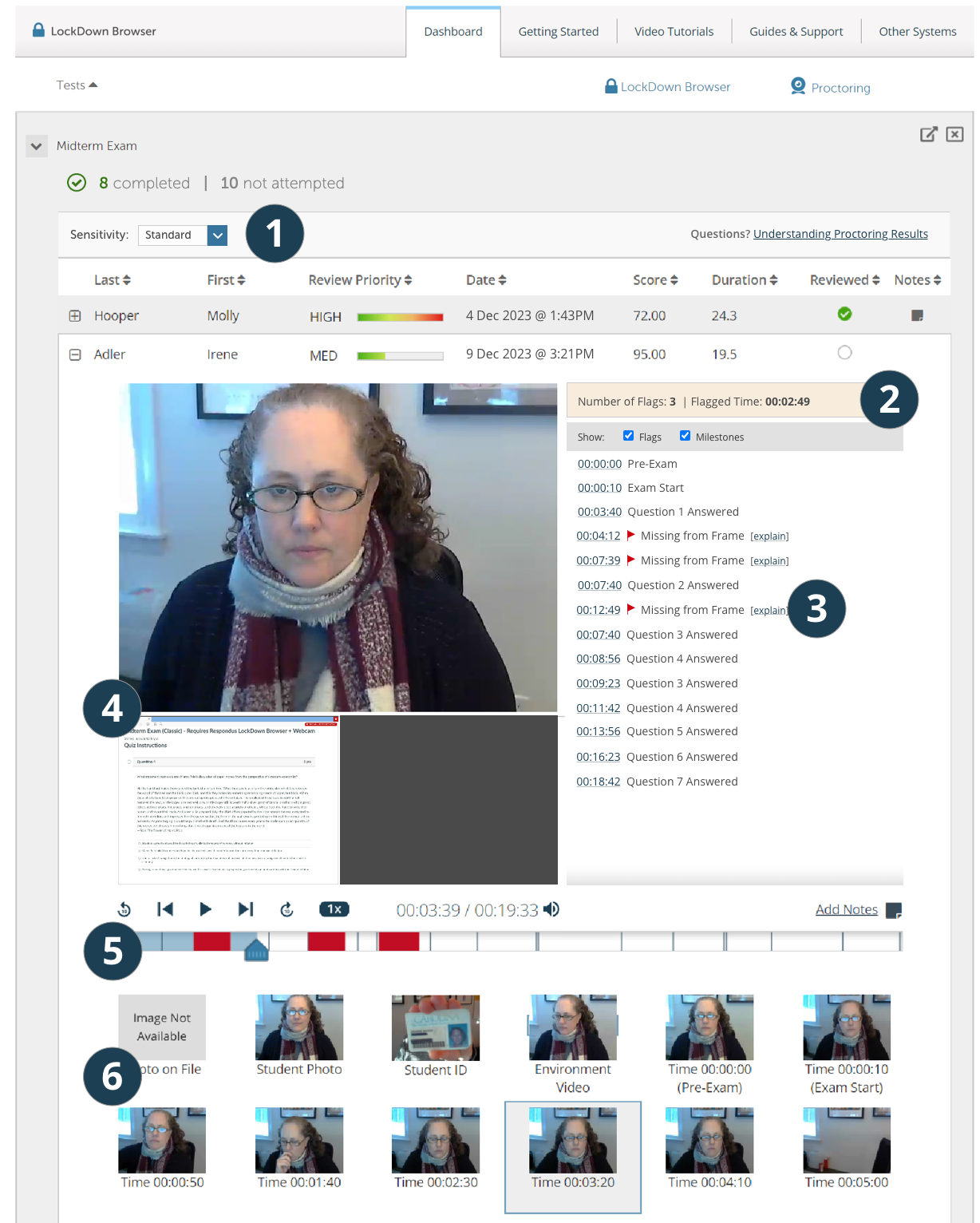

Use [+] to expand the details for a student:

1) Sensitivity (see explanation below)
2) Summary of key data
3) List of Flags and Milestones (see explanation below)
4) Video playback and controls
5) Timeline with flags (red) and milestones (blue)
6) Thumbnail images from video

Frequently Asked Questions

How is Review Priority Determined?

The Review Priority value is derived from three sources of data: the webcam video of the test taker, the computing device and network used for the assessment, and the student's interaction with the assessment itself.

More details about Review Priority

The webcam video is analyzed using computer vision technology, which is how flagged events like "Missing from Frame" and "Different person in Frame" are generated.

Data from the computing device and network will generate events such as video interruptions, automatic restarts of a webcam session, attempts to switch applications, and more.

Data is also obtained from the student's interaction with the assessment, such as when the exam session starts and ends, when answers are saved, and whether the student exited the exam early.

Review Priority is based on an analysis of the flagged events, which applies weights and other adjustments to the data.
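Respondus does not publish how Review Priority is computed. As a rough mental model only, the sketch below shows how a weighted composite of this kind could turn the quantity and severity of flagged events into a Low, Medium, or High result plus a fill level for a green-to-red bar. Every weight, threshold, and the `review_priority` function itself are hypothetical assumptions, not Respondus Monitor's actual method.

```python
# A minimal sketch of a weighted composite score. All weights and thresholds
# below are HYPOTHETICAL; Respondus does not publish its actual values.

# Hypothetical severity weights for a few flag types.
HYPOTHETICAL_WEIGHTS: dict[str, float] = {
    "Missing from Frame": 3.0,
    "Different person in Frame": 5.0,
    "Multiple persons in Frame": 4.0,
    "Video session terminated early": 2.0,
}
DEFAULT_WEIGHT = 1.0    # hypothetical weight for any other flag
MEDIUM_THRESHOLD = 3.0  # hypothetical score at which priority becomes Medium
HIGH_THRESHOLD = 8.0    # hypothetical score at which priority becomes High
BAR_MAX = 10.0          # hypothetical score that fills the green-to-red bar


def review_priority(flag_names: list[str]) -> tuple[str, float]:
    """Return (priority level, bar fill in [0, 1]) for one session's flags."""
    # Quantity: every flag adds to the score. Severity: each adds its weight.
    score = sum((HYPOTHETICAL_WEIGHTS.get(n, DEFAULT_WEIGHT) for n in flag_names), 0.0)
    if score >= HIGH_THRESHOLD:
        level = "High"
    elif score >= MEDIUM_THRESHOLD:
        level = "Medium"
    else:
        level = "Low"
    # Cap the bar so very flag-heavy sessions simply show a full red bar.
    return level, min(score / BAR_MAX, 1.0)


print(review_priority([]))                      # ('Low', 0.0)
print(review_priority(["Missing from Frame"]))  # ('Medium', 0.3)
print(review_priority(["Different person in Frame", "Multiple persons in Frame"]))  # ('High', 0.9)
```

The point of the sketch is that many minor flags and a few severe ones can both raise the priority, which is why a High result is a prompt to review the video rather than a verdict.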

What are Flags and Milestones?

Respondus Monitor generates a list of events from the exam session. "Flags" are events where a problem might exist, whereas "milestones" are general occurrences such as when the exam started or when a question was answered.
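The distinction can be pictured as a simple data model in which every session event carries a kind, and the timeline in Class Results draws flags in red and milestones in blue. This is a minimal, hypothetical sketch: the `SessionEvent` and `EventKind` names and the `seconds` field are illustrative assumptions, not part of any Respondus interface.

```python
from dataclasses import dataclass
from enum import Enum


class EventKind(Enum):
    FLAG = "flag"            # a possible problem (red on the timeline)
    MILESTONE = "milestone"  # a general occurrence (blue on the timeline)


@dataclass(frozen=True)
class SessionEvent:
    seconds: float  # hypothetical: offset from the start of the recording
    label: str
    kind: EventKind


def split_timeline(events: list[SessionEvent]) -> tuple[list[SessionEvent], list[SessionEvent]]:
    """Sort events by time, then separate flags from milestones."""
    ordered = sorted(events, key=lambda e: e.seconds)
    flags = [e for e in ordered if e.kind is EventKind.FLAG]
    milestones = [e for e in ordered if e.kind is EventKind.MILESTONE]
    return flags, milestones


events = [
    SessionEvent(0.0, "Exam Start", EventKind.MILESTONE),
    SessionEvent(95.0, "Question 1 Answered", EventKind.MILESTONE),
    SessionEvent(40.0, "Missing from Frame", EventKind.FLAG),
]
flags, milestones = split_timeline(events)
print([e.label for e in flags])       # ['Missing from Frame']
print([e.label for e in milestones])  # ['Exam Start', 'Question 1 Answered']
```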

List of Flags and Milestones

Flagged Events*

- Missing from Frame — the student could not be detected in the video frame for a period of time

- Different person in Frame — a person different from the one who started the exam may have been detected in the video frame for a period of time

- Multiple persons in Frame — multiple faces were detected in the video for a period of time

- Partially Missing from Frame — the student’s face was partially missing from the frame for a period of time (This flag only appears when Strict sensitivity is selected.)

- An Internet interruption occurred — a video interruption occurred as a result of an internet failure

- Video frame rate lowered due to quality of internet connection — if a poor upload speed is detected on the internet connection, the frame rate of the webcam video is automatically lowered

- Student exited LockDown Browser early — the student used a manual process to terminate the exam session early; the reason provided by the student is shown

- Low Face Detection — face detection could not be achieved for a significant portion of the exam

- Multiple Bad Lighting Alerts

- Multiple Camera Position Alerts

- A webcam was disconnected — the web camera was disconnected from the computing device during the exam

- A webcam was connected — a web camera was connected to the computing device during the exam

- An attempt was made to switch to another screen or application — indicates an application-switching swipe or keystroke combination was attempted

- Video session terminated early — indicates that the video session terminated unexpectedly and didn't automatically reconnect before the student completed the exam

- Face Detection check failed (pre-exam) — face detection could not be achieved prior to the start of the exam

- Student alerted to failed face detection (during exam)

- Student warned about bad camera positioning — a warning that the student's face is being cropped in the video and that the camera angle should be adjusted. This warning can occur before or during the exam.

- Student warned about bad lighting — a warning that the lighting needs to be adjusted because the face is not appearing clearly in the video. This warning can occur before or during the exam.

- Student turned off alerts — the student selected "Don't show this alert again" during the assessment for Face Detection, Camera Positioning, or Bad Lighting. The student does not receive alerts after this.

Milestone Events*

- Question X Answered — an answer to the question was entered (or changed) by the student

- Pre-Exam — the webcam recording that occurs between the environment check and the start of the exam

- Exam Start — the start of the exam

- End of Exam — the exam was submitted

* New flags and milestones are added periodically; this list isn't comprehensive.

What is Sensitivity?

Instructors can select a Sensitivity level (Strict, Standard, Relaxed) that best aligns with their exam requirements. Changing the sensitivity level affects flagged events and the Review Priority value. Flags for “handheld devices,” such as calculators, can be suppressed by deselecting the “Handheld devices” option in the Sensitivity pull-down list.

More about Sensitivity Levels

Sensitivity Levels:

Strict - Strict sensitivity adds the “Partially Missing” flag to indicate when the student’s eyes and/or portions of the face aren’t appearing in the proctoring video.

Standard - Standard sensitivity, which is the default setting, is intended for a typical exam environment where students have a front-facing camera and are mostly looking toward their screen.

Relaxed - Relaxed sensitivity relies less on face detection and more on whether a student is somewhere in the video frame. A Missing flag will still result if a person cannot be detected at all. Relaxed sensitivity is recommended for exams that permit students to use additional resources (notes, books, calculators, etc.), write on paper, or generally look away from their screen. It is also recommended when an instructor requires the webcam to be positioned at a side-view angle of the student.

More about the Handheld Device flag

Optional flag:

Handheld Device - A handheld device, such as a calculator or phone, was detected. Instructors can exclude these flags from the proctoring results by deselecting “Handheld devices” from the Sensitivity pull-down list.
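The two documented effects of these settings on which flags appear (“Partially Missing from Frame” only under Strict, and “Handheld Device” hidden when deselected) can be expressed as a small filter. This is a hypothetical sketch, not how Respondus Monitor is implemented: it treats flags as a list of candidate names to filter, whereas the product may simply never generate a suppressed flag, and it does not model how Relaxed sensitivity changes face detection itself. The `visible_flags` function and `Sensitivity` enum are illustrative names.

```python
from enum import Enum


class Sensitivity(Enum):
    STRICT = "Strict"
    STANDARD = "Standard"  # the default setting
    RELAXED = "Relaxed"


def visible_flags(candidate_flags: list[str],
                  sensitivity: Sensitivity = Sensitivity.STANDARD,
                  show_handheld: bool = True) -> list[str]:
    """Apply the two documented display rules to a list of flag names.

    Hypothetical simplification: Relaxed mode's reduced reliance on face
    detection is not modeled here.
    """
    shown = []
    for name in candidate_flags:
        # "Partially Missing from Frame" only appears under Strict sensitivity.
        if name == "Partially Missing from Frame" and sensitivity is not Sensitivity.STRICT:
            continue
        # Deselecting "Handheld devices" excludes Handheld Device flags.
        if name == "Handheld Device" and not show_handheld:
            continue
        shown.append(name)
    return shown


flags = ["Partially Missing from Frame", "Handheld Device", "Missing from Frame"]
print(visible_flags(flags))
# ['Handheld Device', 'Missing from Frame']
print(visible_flags(flags, Sensitivity.STRICT, show_handheld=False))
# ['Partially Missing from Frame', 'Missing from Frame']
```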

Important Tips

1) Flags aren't cheating. Flagged events and Review Priority results don't determine whether a student has cheated or committed an exam rule violation. Rather, they are tools to help identify pre-determined events, anomalies, or situations that may warrant further examination by the instructor.

2) Facial detection is important. Several flagging events rely on facial detection. If a student's face is turned away from the webcam or heavily cropped in the video (e.g., only the student's eyes and forehead are visible), facial detection rates will drop. Other things that affect facial detection rates are baseball caps, backlighting, very low lighting, hands on the face, and highly reflective eyeglasses.

3) "False positive" flags. If a student is flagged as "missing" but his/her face is clearly visible in the frame, this would be considered a false positive. Our goal is to reduce false positive flags as much as possible, without missing the "true positive" events. It's not a perfect science — but we are working toward that.

4) Garbage in, garbage out. Automated flagging improves significantly when students produce better videos. Provide these guidelines to students to help them create high-quality videos that result in fewer flags.

- Avoid wearing baseball caps or hats that extend beyond the forehead.

- If using a notebook computer, place it on a firm surface like a desk or table, not your lap.

- If the webcam is built into the screen, avoid making screen adjustments after the exam starts. A common mistake is to push the screen back, resulting in only the top portion of the face being recorded.

- Don't lie down on a couch or bed while taking an exam. There is a greater chance you'll move out of the video frame or change your relative position to the webcam.

- Don't take an exam in a dark room. If the details of your face don't show clearly during the webcam check, the automated video analysis is more likely to flag you as missing.

- Avoid backlighting situations, such as sitting with your back to a window. The general rule is to have light in front of your face, not behind your head.

- Select a distraction-free environment for the exam. Televisions and other people in the room can draw your attention away from the screen. Other people who come into view of the webcam may also trigger flags by the automated system.

5) Continual improvements. Respondus Monitor is foremost a deterrent to cheating. The goal is to provide "meaningful results" to instructors so they can quickly identify areas of the exam session that require closer scrutiny. Respondus Monitor is continually being enhanced so that both students and instructors can enjoy the benefits of online learning and exam integrity.

More Questions?

Schedule a 15-minute call with a Respondus trainer. Get answers to your questions about online proctoring with Respondus Monitor.

Do you prefer email? Send your question to [email protected] and we will get back to you quickly.

Have a technical issue? Submit a support ticket.